More convincing scams, fake nudes: The stakes are getting higher in the war against deepfake abuse

Individuals, businesses and governments will all have to change the way they interact with video conferencing calls, images and more, as nefarious actors abuse the technology that is generating increasingly realistic content.

(Illustration: CNA/Nurjannah Suhaimi)


In recent years, many of us will have come across photos of people with three arms, six fingers or eyes looking in two different directions: generative artificial intelligence (AI) has been a source of much laughter with its failed attempts at recreating reality.

Deepfake technology, too, was once simple fun for some people, who used pictures of friends or family members to insert them into famous movie scenes or make them "say" ridiculous phrases, among other things.

Lately, however, the trend has taken a much darker turn, with nefarious actors weaponising the technology and using deepfakes to commit crimes and hurt others.

Just last month, Singapore's Transport Minister Chee Hong Tat and Mr Edwin Tong, Minister for Culture, Community and Youth, were among more than 100 public servants here who were sent extortion notes containing "compromising" deepfake images.

Readily accessible deepfake tools have made it easy for anyone to create non-consensual sexual images of others. Earlier that month, CNA reported that the police were investigating deepfake nude photos of several Singapore Sports School students that had been created and shared by other students.

Experts say more such cases, and worse ones, are likely to come.

Mr Vinod Shankar, the security lead for Southeast Asia at Accenture, said that threat actors, including scammers, are investing more in high-quality deepfakes. The IT services and consulting firm found that such videos can cost up to US$20,000 (S$27,250) a minute.

The rapid advancement of generative AI technology could also amplify the dangers of deepfakes, experts warned. Just this month, both OpenAI, the company behind ChatGPT, and Google released text-to-video generators that allow users to create hyper-realistic videos.

Both companies have acknowledged the dangers their software could pose in the wrong hands and have said that safeguards are in place.

OpenAI's Sora Turbo restricts the uploading of images of people to its platform to mitigate deepfakes.

Google's Veo 2, which is open to only a small group of users, produces videos with an invisible SynthID watermark that "helps identify them as AI-generated, helping reduce the chances of misinformation and misattribution".

Not all deepfake software has such safeguards, though.

Cybersecurity experts said advances in deepfake technology will eventually make it impossible for the naked eye to tell deepfakes apart from genuine content. Worse yet, the technology will become so pervasive that anyone could make a deepfake, be it for good or bad.

This means that deepfakes could be used not just to manipulate or harm people; they might also "destabilise trust at all levels: Personal, societal and institutional", said an academic from the Singapore University of Technology and Design (SUTD).

Assistant Professor Roy Lee, who teaches in the information systems technology and design programme, said: "As deepfakes become harder to detect, they could make people question the authenticity of all digital content, leading to a 'crisis of truth'."

WHY DEEPFAKES ARE BECOMING MORE ADVANCED

Deepfake technology is advancing because it is being trained on a fast-growing volume of data such as pictures and videos.

The images, videos and sound clips generated by AI will thus become increasingly realistic.

Asst Prof Lee said that advances in AI deep-learning models, which allow such technology to learn, together with greater computing power, have also enabled deepfake programs to process and generate more realistic content.

He added that these developments are spurred by growing demand for hyper-realistic AI-generated media in industries such as entertainment.

Mr Vinod from Accenture said that another source of demand is the black market.

Researchers from his firm found a 223 per cent increase in deepfake-related tools being traded on dark-web forums between the first quarter of 2023 and the first quarter of 2024.

"The financial incentives from creating deepfake content fuel a vicious circle of supply and demand, driving the evolution of these threats," he added.

Dealing with deepfakes is not as simple as a blanket ban, because the technology has its advantages.

Mr Emil Tan, director and co-founder of Infosec in the City, an international cybersecurity network that runs the annual cybersecurity conference SINCON, said that deepfake technology could be used for education and training, such as an AI-generated tutor that adapts to a learner's needs.

"In healthcare and accessibility, deepfake technology helps create synthetic faces and voices for people who have lost the ability to speak," he added, citing voice banking as an example.

BUSINESSES, FINANCIAL INSTITUTIONS AT RISK

As deepfake technology integrates with other AI advancements such as generative models for text and voice, it could enable "multi-modal" attacks that combine fake visuals, speech and context to create highly convincing fabrications, Asst Prof Lee said.

The Monetary Authority of Singapore (MAS) said that deepfakes pose risks in three areas:

- Compromising biometric authentication such as facial recognition

- Facilitating social engineering techniques for phishing and scams

- Propagating misinformation or disinformation

Several businesses globally have already fallen for scams using deepfakes.

A finance worker at a multinational corporation in Hong Kong transferred more than US$25 million to scammers after they used deepfake technology to pose as the company's chief financial officer and other colleagues in a video-conferencing call.

Financial institutions in Hong Kong approved US$25,000 worth of fraudulent loans in August 2023, after a syndicate used eight stolen identity cards to apply for loans and register bank accounts, using deepfakes to bypass facial recognition verification steps.

Mr Steve Wilson, chief product officer at cybersecurity firm Exabeam, said that what adds to the dangers of deepfakes is how people often think that "seeing is believing", so deepfakes can bypass natural scepticism.

"People trust video; it's visceral, it's emotional," he added. His firm has predicted that video deepfakes will become more pervasive in 2025.

Without a doubt, the financial and banking sectors are the main targets for deepfake scams.

Mr Shanmuga Sunthar Muniandy, director of architecture and chief evangelist for Asia Pacific at data management provider Denodo, said: "The banking sector has been undergoing extensive digital transformation in the past few years, but having so much of banking processes existing online now means that hackers find it easier than ever to impersonate individuals to cheat consumers or businesses out of substantial amounts of money."

He pointed out that accounting firm Deloitte's Center for Financial Services had predicted that generative AI could enable fraud losses in the United States to reach US$40 billion by 2027, up from US$12.3 billion in 2023.
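To put that projection in perspective, the short Python calculation here works out the constant yearly growth rate implied by the two figures cited. It is a back-of-the-envelope sketch that assumes losses compound evenly over the four years from 2023 to 2027; the projection itself does not necessarily assume a smooth curve, and the function name is illustrative.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures as cited: US$12.3 billion in 2023, US$40 billion projected for 2027.
rate = implied_annual_growth(12.3, 40.0, 2027 - 2023)
print(f"Implied growth: {rate:.1%} a year")  # Implied growth: 34.3% a year
```

On that assumption, losses would need to grow by roughly a third every year.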

Overcoming such fraud would be tough, Mr Wilson said.

"Imagine hopping onto a Zoom call with what looks and sounds exactly like your chief financial officer, asking you to approve an urgent transfer. How many people would hesitate to comply?" he asked, warning that scams will get bigger as deepfakes become easier to generate.

"Attackers won't stop at one transaction. They'll orchestrate complex schemes where deepfakes impersonate auditors, executives and even regulators to legitimise their requests."

Mr Wilson added that his firm had recently detected a deepfake candidate during a job interview.

"The voice sounded like it had walked out of a 1970s Godzilla movie: mechanical, misaligned and, frankly, a little eerie," he said of the deepfake candidate. "The giveaway was subtle, but in the future, these tricks will be seamless."

Smaller firms are also under "serious threat", the Association of Small and Medium Enterprises in Singapore told CNA TODAY.

"The informal nature in which small- and medium-sized enterprises (SMEs) often perform their financial processes makes them particularly susceptible to deepfakes," it said.

"For example, bosses may give a WhatsApp video call to their staff members to approve and send money to an unknown third party. Such informal processes make it easy for cybercriminals to impersonate higher management to request unauthorised financial transactions or confidential information, making traditional security measures insufficient."

The association said it has thus stepped up efforts to educate SMEs on tackling cybersecurity threats, such as through its Cyber Shield Series articles online and by organising AI Festival Asia next month.

In the meantime, MAS said that it is working with financial institutions to strengthen the resilience of multi-factor authentication measures.

It also released a paper in July this year to raise awareness of how deepfakes and other generative AI can pose a threat, and publishes information through its national financial education programme MoneySense.

FUELLING SOCIAL DISORDER, DISTRUST IN GOVERNMENT

Experts also warned that governments around the world would face more threats as deepfake technology evolves.

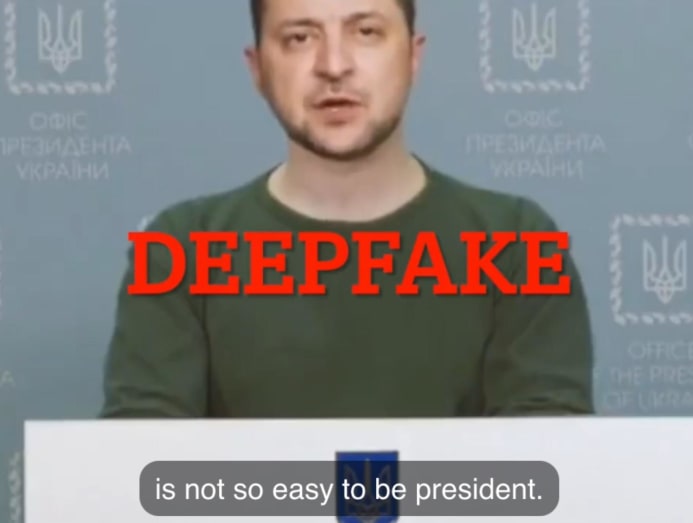

In 2022, a deepfake video of Ukrainian President Volodymyr Zelenskyy asking soldiers to surrender to Russia made the rounds amid the ongoing war between the two countries.

Associate Professor Brian Lee from the Singapore University of Social Sciences (SUSS) said that malicious actors can use deepfakes to spread lies and disinformation about governments, damaging their reputations.

Assoc Prof Lee, who heads the communication programme at the School of Humanities and Behavioural Sciences, added: "Even if the authorities try to present the truth, people may not be able to distinguish truth from fake news subsequently. This is going to hurt the entire societal and political system in the long run."

Mr Tan of Infosec in the City also warned that people might begin to question the authenticity of all audio and video content, even legitimate material. This could spread mistrust and create a "liar's dividend", where politicians falsely claim that genuine content is fake news.

Worse still, Mr Tan warned, societies might fall into "information nihilism", where people question the authenticity of everything.

"This could paralyse crisis responses during emergencies, destabilise democracies and fuel societal cynicism."

For Singapore, the experts foresee that the upcoming General Election, which must be held by November 2025, will be susceptible to deepfakes.

"Deepfakes can be weaponised to spread disinformation, fabricating videos or audio of candidates making inflammatory statements or engaging in unethical behaviour," Asst Prof Lee from SUTD said.

"These fake materials can influence voter perceptions and disrupt democratic processes, often surfacing too close to election day for proper verification."

Recognising the threat, Singapore's Parliament passed a bill on Oct 15 to ban deepfakes of candidates during elections.

Whether this and other existing laws to combat online misinformation will be enough to prevent nefarious actors from using deepfakes to interfere is impossible to predict, the analysts said.

As deepfakes become indistinguishable to the naked eye, Mr Tan said, the legal and law enforcement sectors will have to deal with criminals complicating prosecutions by claiming that genuine video evidence is deepfaked.

"Courts, investigators and human resource teams will require deepfake detection tools, increasing operational costs while delaying decision-making and judicial processes," Mr Tan added.

On the detection front, Mr Christopher Sia, director of the Sense-making and Surveillance Centre of Expertise at HTX, also known as the Home Team Science and Technology Agency, said that the agency has developed a deepfake detector called AlchemiX, which is being trialled by Home Team officers including the police.

The agency also works with industry players, as well as with academics to explore ideas that might work in the future, such as images that cannot be processed by deepfake tools, Mr Sia added.

NOT JUST MONETARY LOSSES FOR INDIVIDUALS

As deepfakes become more life-like, people will find it harder to differentiate AI-generated content from reality and thus become more susceptible to financial scams involving deepfakes.

With just an audio recording and an image, a family member's voice and likeness could be spoofed. A scammer could then, for example, make a video call posing as that family member and ask for money.

Monetary loss is not the only danger. With telemedicine becoming increasingly common, for example, scammers could use deepfakes to target the healthcare system to get hold of personal information.

Mr Shanmuga of Denodo said that deviant actors could use deepfakes to pose as a doctor and prescribe the wrong medications to someone, or pose as a patient to get prescription drugs.

Another future concern is whether deepfakes could bypass biometric authentication systems. This is a threat in Singapore, where personal information on many platforms is protected by such systems, chief among them Singpass, the gateway to the personal identification data of Singapore residents.

The experts said software should in future use multiple forms of authentication so that biometric verification is not the only line of defence. For example, Singpass users need an ID and password to log in to services, and receive SMS or email notifications when changes are made to their Singpass account.
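The layered approach the experts describe can be sketched in a few lines of Python. This is a hypothetical illustration, not how Singpass or any real system is built: the LoginAttempt fields, the one-time-code factor and the 0.9 face-match threshold are all assumptions. What it shows is that a deepfake which fools the face check still fails if the other factors are missing, and that every account change triggers an alert to the owner.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical cut-off for a face-match score between 0.0 and 1.0.
FACE_MATCH_THRESHOLD = 0.9


@dataclass
class LoginAttempt:
    password_ok: bool        # something the user knows (ID and password)
    face_match_score: float  # biometric similarity from a face-recognition check
    otp_ok: bool             # hypothetical one-time code sent to a registered device


def allow_login(attempt: LoginAttempt) -> bool:
    """Grant access only if every factor passes, so a face check fooled
    by a deepfake is not enough on its own."""
    face_ok = attempt.face_match_score >= FACE_MATCH_THRESHOLD
    return attempt.password_ok and face_ok and attempt.otp_ok


def apply_account_change(change: str, notify: Callable[[str], None]) -> None:
    """Apply a change and always alert the owner through a separate channel
    (for example, SMS or email) so an unauthorised change is noticed quickly."""
    # Persisting the change is omitted in this sketch.
    notify(f"A change was made to your account: {change}")


# A convincing deepfake passes the face check but has neither password nor code.
deepfaked_face = LoginAttempt(password_ok=False, face_match_score=0.97, otp_ok=False)
print(allow_login(deepfaked_face))  # False
```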

Associate Professor Terence Sim from the National University of Singapore (NUS) said that facial recognition is unlikely to be bypassed for now.

"A good (cybersecurity) software usually can ward against presentation attacks when it comes to visual cues like face recognition. These attacks include when someone uses a screenshot or photo of someone and puts it in front of a camera. The software is able to detect it."

Assoc Prof Sim, who is with the Centre for Trusted Internet Community at NUS, added that the challenge arises when humans have to identify people based on visual cues, as they can be "easily tricked" into granting access this way.

The creation of non-consensual sexual content using deepfakes will also become increasingly problematic as the technology becomes more accessible, allowing people to make such content of well-known personalities, friends and family at the click of a button and at almost no cost.

Graphika, a company that analyses social networks, found that there were more than 32 million visits globally to "nudify" or "undressing" websites in September alone. Such sites often use deepfake technology to produce this kind of non-consensual sexual content.

Ms Sugidha Nithiananthan, director of advisory and research at women's rights group AWARE, said: "Unfortunately, the reality is that there isn't much that an individual can do to protect themselves from someone with malicious intent.

"The tools to create deepfake content are easily available online and can be used to create explicit images from just a professional headshot put up on a business website or a family photograph shared on social media."

HOW TO GUARD AGAINST DEEPFAKES

The experts said that there are ways to reduce the risks at the individual level, and people should be cautious about what they post online.

Assoc Prof Sim of NUS said that some telltale signs of deepfakes are:

- Physical abnormalities such as flickering, obvious seams around the face and mismatched resolution between parts of an image

- Choppy and abrupt transitions in audio

- Inconsistencies such as a person's eyes not blinking or blinking too much, inconsistent shadows and lip movement not matching audio

- Unnatural motion of hair or clothes

- The person is speaking about content they are unlikely to talk about in real life
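
Detection tools can turn some of these cues into measurements. As an illustrative sketch of the blinking cue, the Python here uses the eye aspect ratio (EAR), a common blink-detection heuristic computed from six landmark points around each eye, and flags a clip whose blink rate falls outside an assumed normal range. It assumes the landmarks have already been extracted frame by frame by a separate face-landmark detector (not shown); the 0.2 threshold and the 8 to 30 blinks-a-minute range are illustrative values, not calibrated ones, and many newer deepfakes blink convincingly, so this is at best one weak signal among many.

```python
import math

Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """Eye aspect ratio from six landmarks: eye[0] and eye[3] are the corners,
    eye[1] and eye[2] sit on the upper lid, eye[5] and eye[4] sit directly
    below them on the lower lid. The value drops towards 0 as the eye closes."""
    vertical = math.dist(eye[1], eye[5]) + math.dist(eye[2], eye[4])
    horizontal = math.dist(eye[0], eye[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ears: list[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for value in ears:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def blink_rate_suspicious(ears: list[float], fps: float,
                          low: float = 8.0, high: float = 30.0) -> bool:
    """Flag a clip whose blinks per minute fall outside the assumed [low, high] range."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        raise ValueError("clip has no frames")
    rate = count_blinks(ears) / minutes
    return rate < low or rate > high


# One minute of synthetic EAR readings at 30 frames per second.
never_blinks = [0.3] * 1800
normal = [0.3] * 1800
for start in range(60, 1800, 120):  # a three-frame blink every four seconds
    normal[start:start + 3] = [0.1] * 3

print(blink_rate_suspicious(never_blinks, fps=30))  # True
print(blink_rate_suspicious(normal, fps=30))        # False
```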

Assoc Prof Sim added that people can try doing a reverse-image search online to check whether an image has been deepfaked. Reverse-image search engines such as Google or TinEye look for similar images that have previously been uploaded to the internet, allowing users to find the original source of an image they have seen.
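Commercial search engines use far more sophisticated matching than anything shown here, but one simple, well-known technique for spotting near-duplicate images is perceptual hashing. The Python here is a toy "average hash" that assumes each image has already been shrunk to an 8x8 grayscale thumbnail (for example, with an imaging library); the function names are illustrative. Copies that differ only slightly, such as a brightened version, produce the same or nearly the same 64-bit fingerprint, while very different images differ in many bits.

```python
def average_hash(thumbnail: list[list[int]]) -> int:
    """64-bit average hash of an 8x8 grayscale thumbnail (pixel values 0-255).
    Each bit records whether a pixel is brighter than the thumbnail's mean."""
    pixels = [value for row in thumbnail for value in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for value in pixels:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests a near-duplicate image."""
    return bin(a ^ b).count("1")


original = [[(row * 8 + col) * 3 for col in range(8)] for row in range(8)]
brightened = [[value + 10 for value in row] for row in original]
inverted = [[255 - value for value in row] for row in original]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(inverted)))    # 64
```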

What if someone is on a video-conferencing call? He suggested issuing a challenge-response, for example, asking the person to raise their right hand or turn their head.

Another way is to ask the person about something only the two of them would know, such as when they last met.
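The value of a challenge-response lies in its unpredictability: a pre-rendered or scripted deepfake cannot prepare for a request it does not know is coming. The Python sketch here picks challenges at random using the standard library's secrets module and never repeats one within a call; the challenge list is a hypothetical example built from the actions suggested above, and in practice a human still has to judge whether the response looks natural.

```python
import secrets

# Hypothetical list of live actions that a pre-rendered or scripted
# deepfake is unlikely to have prepared for.
CHALLENGES = [
    "Raise your right hand",
    "Raise your left hand",
    "Turn your head to the left",
    "Turn your head to the right",
]


def issue_challenge(already_asked: set[str]) -> str:
    """Pick an unpredictable challenge that has not been used yet in this call."""
    remaining = [c for c in CHALLENGES if c not in already_asked]
    if not remaining:
        raise ValueError("all challenges used; end the call or verify another way")
    choice = secrets.choice(remaining)  # cryptographically strong randomness
    already_asked.add(choice)
    return choice


asked: set[str] = set()
print(issue_challenge(asked))
```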

The reality is that it is almost impossible to prevent someone from making a deepfake image of a person, considering how most people have some form of digital footprint.

Creating a tool that can detect deepfakes is tricky, and detection is locked in a never-ending arms race to keep up with creation technology.

Detection tools and deepfake technology may be trained on different data sets, Mr Sia from HTX said. This can make it difficult for detection tools to accurately pinpoint deepfake content.

"Another challenge today is that deepfakes are unique and may specialise in different 'methods' like lip-syncing, for example. The AI used in detection tools may be able to detect certain methods, but not others like, say, face swapping."

In an ideal situation with technological advances, it would be possible to make a "generalist" deepfake detector that can recognise any deepfake content with high accuracy, Mr Sia added.

Within the community, people who come across fraudulent AI content should be able to request takedowns of content using copyright and privacy laws, Mr Vinod said.

At the societal level, there needs to be proper education to teach people about the harms of non-consensual sexual content created by deepfakes, Ms Sugidha of AWARE said.

While there is an urgent need to regulate the use of deepfake technology, one of the root causes of the deepfake problem is the prevalence of misogyny in society at large, she added.

"It is thus important that comprehensive sexuality education is taught in schools so that young people have a firm understanding of consent, gender-based violence and inequality, mutual respect and healthy relationships."